20 July, 2022

A Review Of Top Quality DOP-C01 Free Dumps

Act now and download your Amazon Web Services DOP-C01 practice test today! Do not waste time on worthless Amazon Web Services DOP-C01 tutorials. Download the updated AWS Certified DevOps Engineer - Professional exam with real questions and answers, and begin learning DOP-C01 from a proven, professional resource.

Here are some free DOP-C01 dump questions for you:

Question 1

You are planning on using AWS CodeDeploy in your AWS environment. Which of the below features of AWS CodeDeploy can be used to specify scripts to be run on each instance at various stages of the deployment process?

Question 2

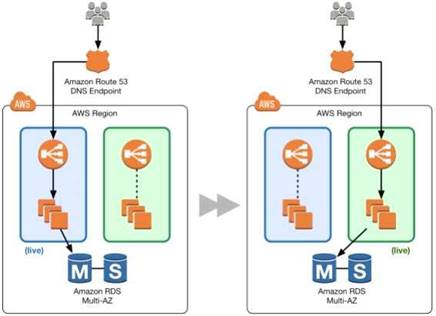

You have a web application hosted on EC2 instances. Application changes are made to the web application on a quarterly basis. Which of the following are examples of blue/green deployments that can be applied to the application? Choose 2 answers from the options given below.

Question 3

Which of the following are ways to secure data at rest and in transit in AWS? Choose 3 answers from the options given below.

Question 4

You have defined a Linux-based instance stack in OpsWorks. You now want to attach a database to the OpsWorks stack. Which of the below is an important step to ensure that the application on the Linux instances can communicate with the database?

Question 5

You have carried out a deployment using Elastic Beanstalk with the All at once method, but the application is unavailable. What could be the reason for this?

Question 6

You need the absolute highest possible network performance for a cluster computing application. You already selected homogeneous instance types supporting 10 gigabit enhanced networking, made sure that your workload was network bound, and put the instances in a placement group. What is the last optimization you can make?

Question 7

You have created a DynamoDB table for an application that needs to support thousands of users. You need to ensure that each user can only access their own data in a particular table. Many users already have accounts with a third-party identity provider, such as Facebook, Google, or Login with Amazon. How would you implement this requirement?

Choose 2 answers from the options given below.
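As background for the scenario above, per-user access to a DynamoDB table is commonly expressed as an IAM policy that uses the `dynamodb:LeadingKeys` condition key together with a web identity federation variable. The following is a minimal sketch of building such a policy document, not a statement of the intended exam answer. The function name, table ARN, account ID, and action list are assumptions chosen for illustration; the variable `${www.amazon.com:user_id}` is the one used for Login with Amazon.

```python
import json


def per_user_table_policy(table_arn: str, identity_variable: str) -> dict:
    """Build an IAM policy that limits a federated user to items whose
    partition key equals their own identity-provider user ID.

    Hypothetical helper for illustration; the action list is an assumption.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "dynamodb:GetItem",
                    "dynamodb:Query",
                    "dynamodb:PutItem",
                    "dynamodb:UpdateItem",
                    "dynamodb:DeleteItem",
                ],
                "Resource": [table_arn],
                "Condition": {
                    # Every partition key touched by the request must match
                    # the caller's federated user ID.
                    "ForAllValues:StringEquals": {
                        "dynamodb:LeadingKeys": [identity_variable]
                    }
                },
            }
        ],
    }


# Placeholder ARN and account ID, not taken from the question.
policy = per_user_table_policy(
    "arn:aws:dynamodb:us-east-1:123456789012:table/UserData",
    "${www.amazon.com:user_id}",
)
print(json.dumps(policy, indent=2))
```

A policy like this would typically be attached to the role that federated users assume, so that a single role can serve all users while each one sees only their own items.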

Question 8

If you're trying to configure an AWS Elastic Beanstalk worker tier for easy debugging if there are problems finishing queue jobs, what should you configure?

Question 9

Your company has a requirement to set up instances running as part of an Auto Scaling group. Part of the requirement is to use lifecycle hooks to install custom software and do the necessary configuration on the instances. The setup might take an hour, or might finish before the hour is up. How should you set up lifecycle hooks for the Auto Scaling group? Choose 2 ideal actions you would include as part of the lifecycle hook.
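To make the lifecycle hook mechanics concrete, here is a minimal sketch of a setup loop that keeps an instance in its wait state while configuration is still running and releases it as soon as configuration finishes. It assumes a boto3-style Auto Scaling client is passed in as `client`; `record_lifecycle_action_heartbeat` and `complete_lifecycle_action` are real Auto Scaling API operations, while the function name, the `setup_done` callback, and the 300-second interval are hypothetical choices for illustration.

```python
import time
from typing import Callable


def run_setup_with_hook(
    client,
    group: str,
    hook: str,
    instance_id: str,
    setup_done: Callable[[], bool],
    heartbeat_interval_s: float = 300.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Send heartbeats until setup_done() returns True, then complete the hook.

    Returns the number of heartbeats sent. `client` is assumed to expose the
    boto3 Auto Scaling client methods used below.
    """
    heartbeats = 0
    while not setup_done():
        # Extend the hook timeout because setup is still in progress.
        client.record_lifecycle_action_heartbeat(
            LifecycleHookName=hook,
            AutoScalingGroupName=group,
            InstanceId=instance_id,
        )
        heartbeats += 1
        sleep(heartbeat_interval_s)
    # Setup finished (possibly early): let the instance enter service now.
    client.complete_lifecycle_action(
        LifecycleHookName=hook,
        AutoScalingGroupName=group,
        InstanceId=instance_id,
        LifecycleActionResult="CONTINUE",
    )
    return heartbeats
```

With boto3 installed, the client would typically come from `boto3.client("autoscaling")`; passing it in as a parameter keeps the sketch easy to exercise with a stub.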

Question 10

Your application uses CloudFormation to orchestrate your application's resources. During your testing phase before the application went live, your Amazon RDS instance type was changed and caused the instance to be re-created, resulting in the loss of test data. How should you prevent this from occurring in the future?

Question 11

Which of the following run command types are available for OpsWorks stacks? Choose 3 answers from the options given below.

Question 12

You need to deploy a new application version to production. Because the deployment is high-risk, you need to roll the new version out to users over a number of hours, to make sure everything is working correctly. You need to be able to control the proportion of users seeing the new version of the application down to the percentage point. You use ELB and EC2 with Auto Scaling groups and custom AMIs with your code pre-installed assigned to launch configurations. There are no database-level changes during your deployment. You have been told you cannot spend too much money, so you must not increase the number of EC2 instances much at all during the deployment, but you also need to be able to switch back to the original version of code quickly if something goes wrong. What is the best way to meet these requirements?

Question 13

Which of the following is false when it comes to using Elastic Load Balancing with OpsWorks stacks?

Question 14

Your serverless architecture using Amazon API Gateway, AWS Lambda, and Amazon DynamoDB experienced a large increase in traffic to a sustained 3000 requests per second, along with a dramatic increase in failure rates. Your requests, during normal operation, last 500 milliseconds on average. Your DynamoDB table did not exceed 50% of provisioned throughput, and the table's primary keys are designed correctly. What is the most likely issue?
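The numbers in this scenario lend themselves to a quick back-of-the-envelope check: the steady-state number of concurrent executions is roughly the request rate multiplied by the average request duration. The sketch below works that out. The limit of 1000 concurrent executions is an assumption (a common default for Lambda); the actual limit depends on the account and Region, and the function name is hypothetical.

```python
def required_concurrency(requests_per_second: float, avg_duration_seconds: float) -> float:
    """Estimate steady-state concurrent executions as rate x duration."""
    return requests_per_second * avg_duration_seconds


# Assumed limit for illustration only; check the real account limit.
ASSUMED_CONCURRENCY_LIMIT = 1000

needed = required_concurrency(3000, 0.5)  # 3000 req/s, 500 ms each
print(f"Estimated concurrent executions: {needed:.0f}")
print(f"Exceeds assumed limit of {ASSUMED_CONCURRENCY_LIMIT}: {needed > ASSUMED_CONCURRENCY_LIMIT}")
```

Under that assumption the estimate is 1500 concurrent executions, which is above the assumed limit, so throttling at the function layer would be a plausible place to look before suspecting the database.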

Question 15

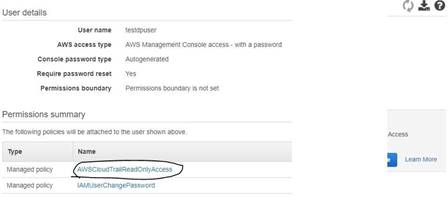

An audit is going to be conducted for your company's AWS account. Which of the following steps will ensure that the auditor has the right access to the logs of your AWS account?

Question 16

You run accounting software in the AWS cloud. This software needs to be online continuously during the day every day of the week, and has a very static requirement for compute resources. You also have other, unrelated batch jobs that need to run once per day at any time of your choosing. How should you minimize cost?

Question 17

You meet once per month with your operations team to review the past month's data. During the meeting, you realize that 3 weeks ago, your monitoring system which pings over HTTP from outside AWS recorded a large spike in latency on your 3-tier web service API. You use DynamoDB for the database layer, ELB, EBS, and EC2 for the business logic tier, and SQS, ELB, and EC2 for the presentation layer. Which of the following techniques will NOT help you figure out what happened?

Question 18

Which of the following services, along with CloudFormation, helps in building a Continuous Delivery release practice?

Question 19

There is a requirement for a vendor to have access to an S3 bucket in your account. The vendor already has an AWS account. How can you provide access to the vendor on this bucket?

Question 20

Your company has an on-premises Active Directory setup in place. The company has extended its footprint on AWS, but still wants to be able to use its on-premises Active Directory for authentication. Which of the following AWS services can be used to ensure that AWS resources such as Amazon WorkSpaces can continue to use the existing credentials stored in the on-premises Active Directory?

Question 21

You are using Elastic Beanstalk to deploy an application that consists of a web and application server. There is a requirement to run some Python scripts before the application version is deployed to the web server. Which of the following can be used to achieve this?

Question 22

Which of these is not an intrinsic function in AWS CloudFormation?

Question 23

Which of the following services can be used to provision an ECS cluster containing the following components in an automated way:

1) Application Load Balancer for distributing traffic among various task instances running on EC2 instances

2) Single task instance on each EC2 instance running as part of an Auto Scaling group

3) Ability to support various types of deployment strategies

Question 24

You have a legacy application running that uses an m4.large instance size and cannot scale with Auto Scaling, but only has peak performance 5% of the time. This is a huge waste of resources and money, so your Senior Technical Manager has set you the task of trying to reduce costs while still keeping the legacy application running as it should. Which of the following would best accomplish the task your manager has set you? Choose the correct answer from the options below.

Question 25

Which of the following is a reliable and durable logging solution to track changes made to your AWS resources?