04 September, 2023

All About Practical DP-203 Training Tools

We provide guaranteed Microsoft DP-203 sample questions, which are the best for clearing the DP-203 test and getting certified in Microsoft Data Engineering on Microsoft Azure. The DP-203 Questions & Answers cover all the knowledge points of the real DP-203 exam. Crack your Microsoft DP-203 exam with the latest dumps, guaranteed!

We also have free DP-203 dumps questions for you:

Question 1

- (Exam Topic 3)

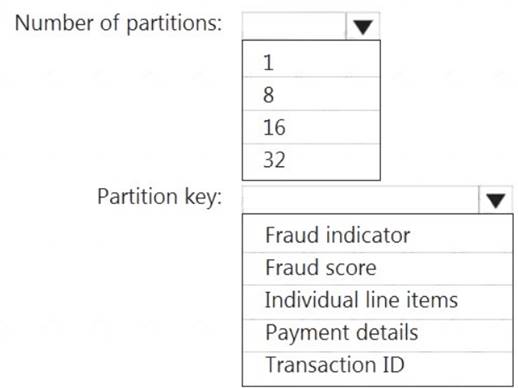

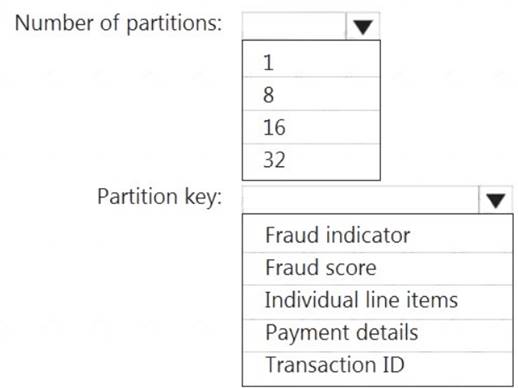

You have an Azure event hub named retailhub that has 16 partitions. Transactions are posted to retailhub. Each transaction includes the transaction ID, the individual line items, and the payment details. The transaction ID is used as the partition key.

You are designing an Azure Stream Analytics job to identify potentially fraudulent transactions at a retail store. The job will use retailhub as the input. The job will output the transaction ID, the individual line items, the payment details, a fraud score, and a fraud indicator.

You plan to send the output to an Azure event hub named fraudhub.

You need to ensure that the fraud detection solution is highly scalable and processes transactions as quickly as possible.

How should you structure the output of the Stream Analytics job? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Solution:

Box 1: 16

For Event Hubs you need to set the partition key explicitly.

An embarrassingly parallel job is the most scalable scenario in Azure Stream Analytics. It connects one partition of the input to one instance of the query to one partition of the output.

Box 2: Transaction ID

Reference:

https://docs.microsoft.com/en-us/azure/event-hubs/event-hubs-features#partitions
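As a rough illustration of why the output should use the same partition count and the same partition key as the input, the following Python sketch models an embarrassingly parallel layout. The hash function, the `partition_for` and `route` helpers, and the sample transaction IDs are assumptions made for this illustration only; Event Hubs applies its own internal hashing.

```python
import hashlib

PARTITION_COUNT = 16  # retailhub has 16 partitions; fraudhub is assumed to match


def partition_for(key: str, partition_count: int = PARTITION_COUNT) -> int:
    """Map a partition key to a partition index (illustrative hash, not the Event Hubs one)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def route(transaction_ids):
    """Return {transaction_id: (input_partition, output_partition)}."""
    routes = {}
    for tx_id in transaction_ids:
        input_partition = partition_for(tx_id)
        # Same key and same partition count on the output side, so the
        # event keeps the same partition index from input to output.
        output_partition = partition_for(tx_id)
        routes[tx_id] = (input_partition, output_partition)
    return routes


if __name__ == "__main__":
    for tx_id, (src, dst) in route(["tx-1001", "tx-1002", "tx-1003"]).items():
        print(f"{tx_id}: input partition {src} -> output partition {dst}")
```

Because every transaction stays on one partition index, each query instance can work on its own partition without exchanging data with the others.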

Does this meet the goal?


Question 2

- (Exam Topic 3)

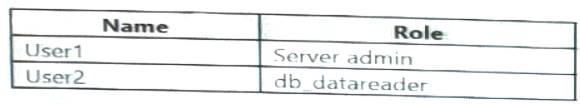

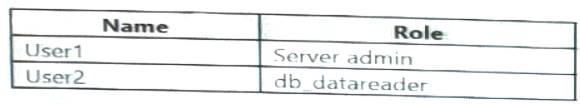

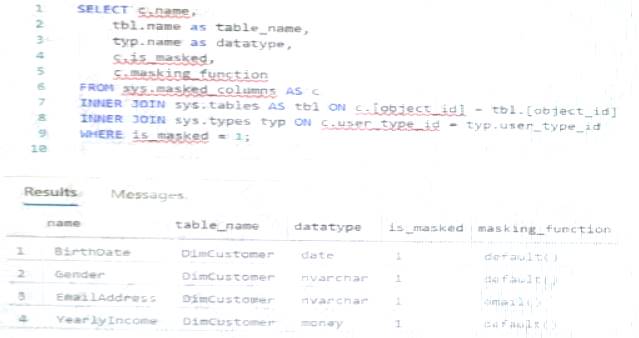

You have an Azure Synapse Analytics dedicated SQL pool that contains the users shown in the following table.

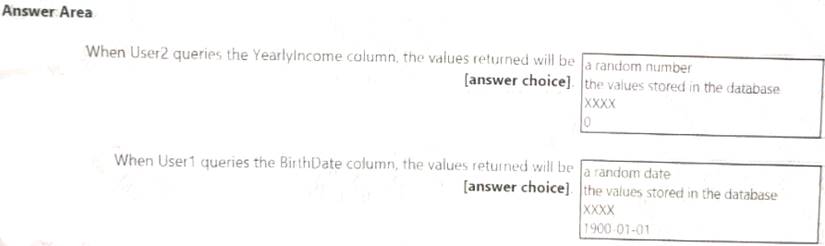

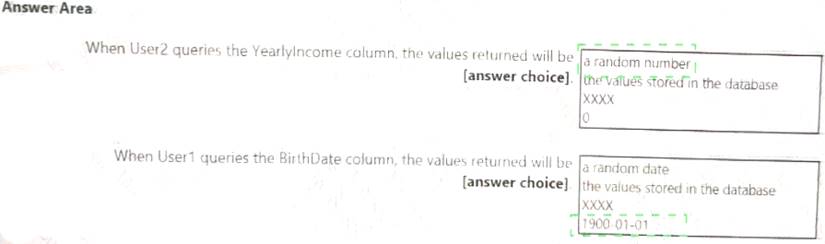

User1 executes a query on the database, and the query returns the results shown in the following exhibit.

User1 is the only user who has access to the unmasked data.

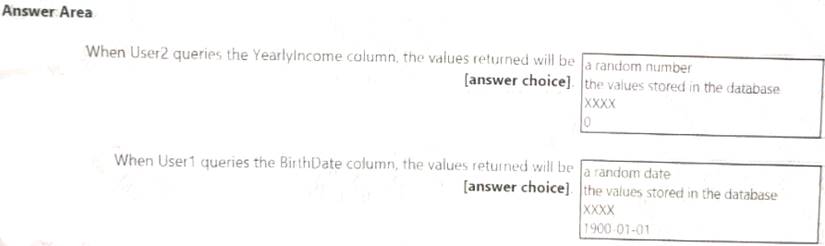

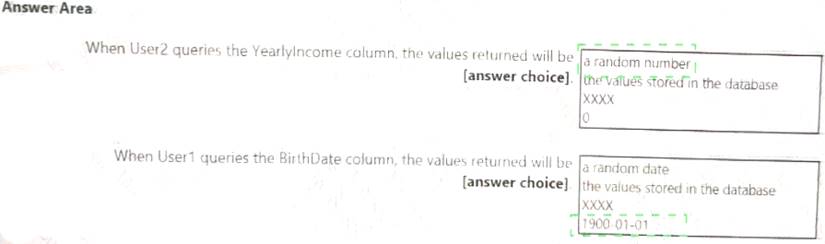

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.

Solution:

Does this meet the goal?


Question 3

- (Exam Topic 3)

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are designing an Azure Stream Analytics solution that will analyze Twitter data.

You need to count the tweets in each 10-second window. The solution must ensure that each tweet is counted only once.

Solution: You use a hopping window that uses a hop size of 5 seconds and a window size of 10 seconds.

Does this meet the goal?
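To see how the proposed window behaves, here is a minimal Python sketch that lists which windows contain a given event. It assumes simplified half-open windows `[start, start + size)` that begin at every multiple of the hop size; the `windows_containing` helper is hypothetical and is not Stream Analytics code.

```python
def windows_containing(timestamp: int, size: int, hop: int) -> list:
    """Return the start times of all windows [start, start + size) that contain timestamp.

    Windows are assumed to start at every multiple of `hop`.
    """
    start = timestamp // hop * hop  # latest window start at or before the event
    starts = []
    while start > timestamp - size:
        starts.append(start)
        start -= hop
    return sorted(starts)


if __name__ == "__main__":
    tweet_time = 7  # seconds since the start of the stream
    # Hopping window: size 10, hop 5. Windows overlap.
    print("hopping:", windows_containing(tweet_time, size=10, hop=5))
    # Tumbling window: hop equals size. Windows do not overlap.
    print("tumbling:", windows_containing(tweet_time, size=10, hop=10))
```

With a hop smaller than the window size, the tweet falls into two overlapping windows, so it is counted twice. When the hop equals the window size (a tumbling window), each tweet belongs to exactly one window.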


Question 4

- (Exam Topic 3)

You are designing an enterprise data warehouse in Azure Synapse Analytics that will contain a table named Customers. Customers will contain credit card information.

You need to recommend a solution to provide salespeople with the ability to view all the entries in Customers. The solution must prevent all the salespeople from viewing or inferring the credit card information.

What should you include in the recommendation?


Question 5

- (Exam Topic 3)

You have an Azure Synapse Analytics serverless SQL pool named Pool1 and an Azure Data Lake Storage Gen2 account named storage1. The AllowBlobPublicAccess property is disabled for storage1.

You need to create an external data source that can be used by Azure Active Directory (Azure AD) users to access storage1 from Pool1.

What should you create first?


Question 6

- (Exam Topic 3)

You are designing an inventory updates table in an Azure Synapse Analytics dedicated SQL pool. The table will have a clustered columnstore index and will include the following columns:

• EventDate: 1 million per day

• EventTypeID: 10 million per event type

• WarehouseID: 100 million per warehouse

• ProductCategoryTypeID: 25 million per product category type

You identify the following usage patterns:

Analysts will most commonly analyze transactions for a warehouse.

Queries will summarize by product category type, date, and/or inventory event type.

You need to recommend a partition strategy for the table to minimize query times. On which column should you recommend partitioning the table?


Question 7

- (Exam Topic 3)

You are designing a statistical analysis solution that will use custom proprietary Python functions on near real-time data from Azure Event Hubs.

You need to recommend which Azure service to use to perform the statistical analysis. The solution must minimize latency.

What should you recommend?


Question 8

- (Exam Topic 3)

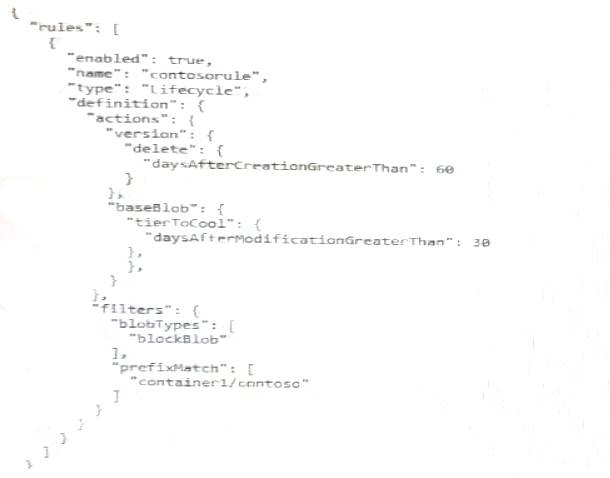

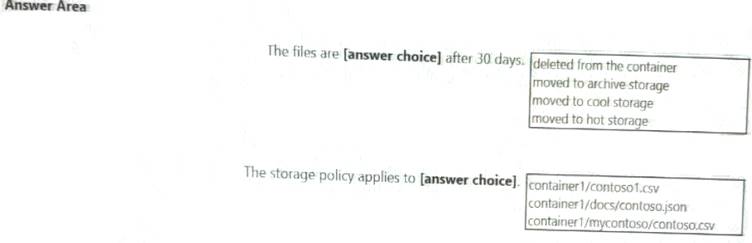

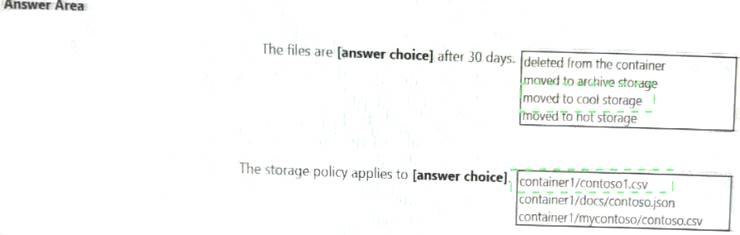

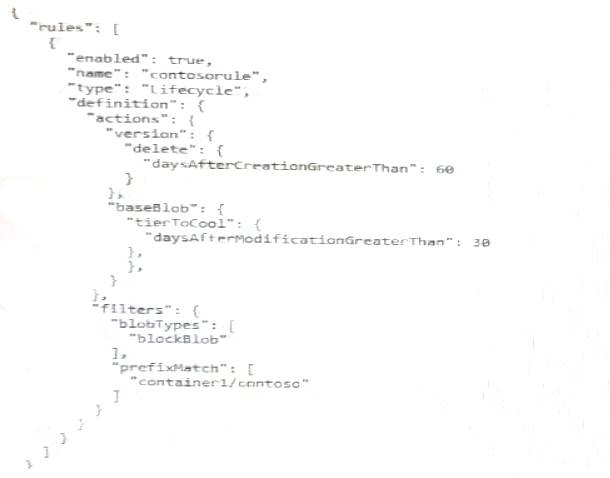

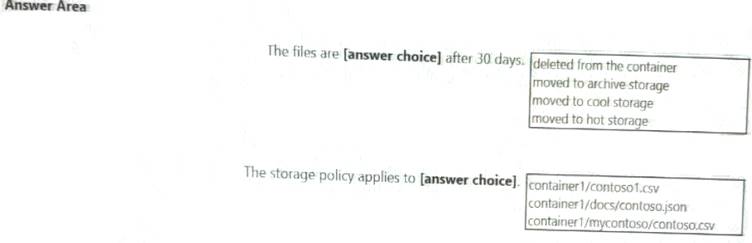

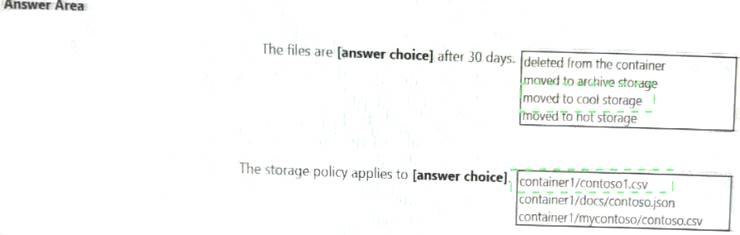

You store files in an Azure Data Lake Storage Gen2 container. The container has the storage policy shown in the following exhibit.

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.

NOTE: Each correct selection is worth one point.

Solution:

Does this meet the goal?


Question 9

- (Exam Topic 3)

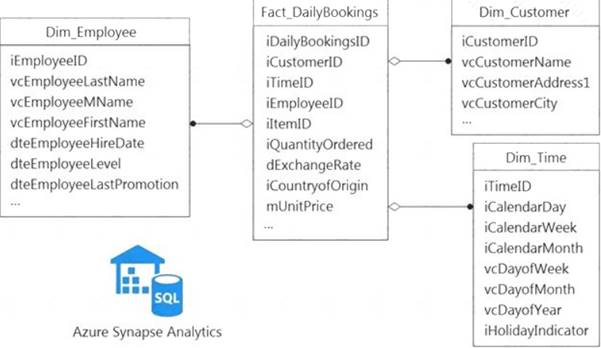

You have a self-hosted integration runtime in Azure Data Factory.

The current status of the integration runtime has the following configurations:

Status: Running

Type: Self-Hosted

Version: 4.4.7292.1

Running / Registered Node(s): 1/1

High Availability Enabled: False

Linked Count: 0

Queue Length: 0

Average Queue Duration: 0.00s

The integration runtime has the following node details:

Name: X-M

Status: Running

Version: 4.4.7292.1

Available Memory: 7697MB

CPU Utilization: 6%

Network (In/Out): 1.21KBps/0.83KBps

Concurrent Jobs (Running/Limit): 2/14

Role: Dispatcher/Worker

Credential Status: In Sync

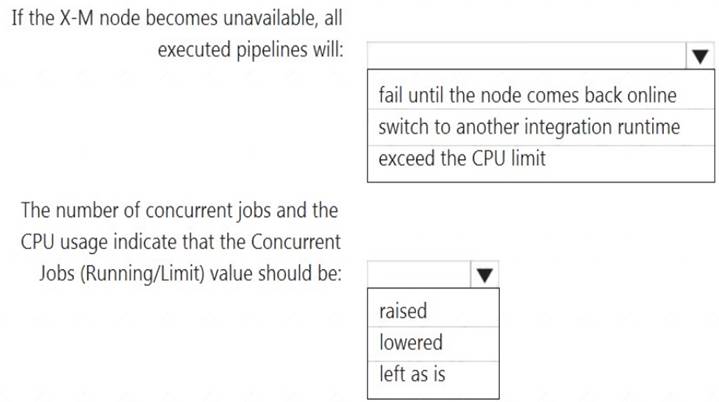

Use the drop-down menus to select the answer choice that completes each statement based on the information presented.

NOTE: Each correct selection is worth one point.

Solution:

Box 1: fail until the node comes back online

We see: High Availability Enabled: False

Note: Enabling high availability means the self-hosted integration runtime is no longer the single point of failure in your big data solution or cloud data integration with Data Factory.

Box 2: lowered

We see:

Concurrent Jobs (Running/Limit): 2/14

CPU Utilization: 6%

Note: When the processor and available RAM aren't well utilized, but the execution of concurrent jobs reaches a node's limits, scale up by increasing the number of concurrent jobs that a node can run.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/create-self-hosted-integration-runtime
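The reasoning behind Box 2 can be sketched as a small decision helper. This is an illustration only: the `NodeStatus` type, the `recommend_job_limit_change` function, and all three thresholds are assumptions chosen for the example, not values taken from Data Factory.

```python
from dataclasses import dataclass


@dataclass
class NodeStatus:
    cpu_utilization_pct: float
    running_jobs: int
    job_limit: int


def recommend_job_limit_change(
    node: NodeStatus,
    low_cpu_pct: float = 20.0,  # hypothetical "CPU is underused" threshold
    busy_ratio: float = 0.9,    # hypothetical "jobs are at the limit" threshold
    idle_ratio: float = 0.5,    # hypothetical "limit is far above demand" threshold
) -> str:
    """Return 'raise', 'lower', or 'keep' for the node's concurrent jobs limit."""
    usage = node.running_jobs / node.job_limit
    if node.cpu_utilization_pct < low_cpu_pct and usage >= busy_ratio:
        # Hardware is idle but the job slots are full: allow more jobs.
        return "raise"
    if usage <= idle_ratio:
        # Far fewer jobs run than the limit allows: the limit can come down.
        return "lower"
    return "keep"


if __name__ == "__main__":
    # Values from the node details above: 6% CPU, 2 of 14 job slots in use.
    print(recommend_job_limit_change(NodeStatus(6.0, 2, 14)))
```

For the node shown in the question, only 2 of 14 job slots are in use, so the sketch reports that the limit could be lowered.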

Does this meet the goal?


Question 10

- (Exam Topic 3)

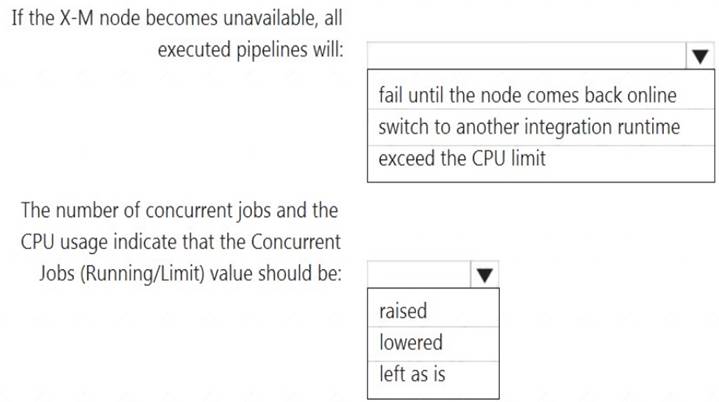

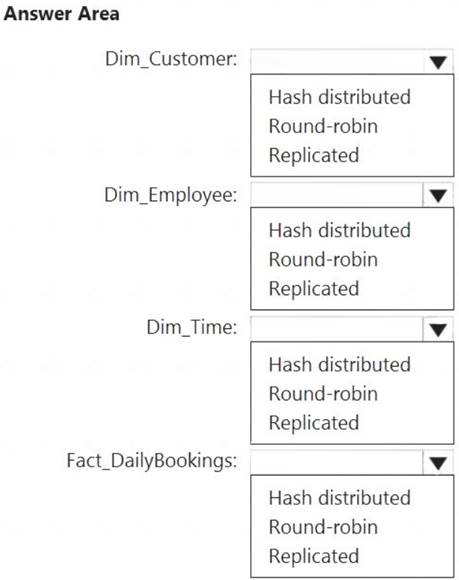

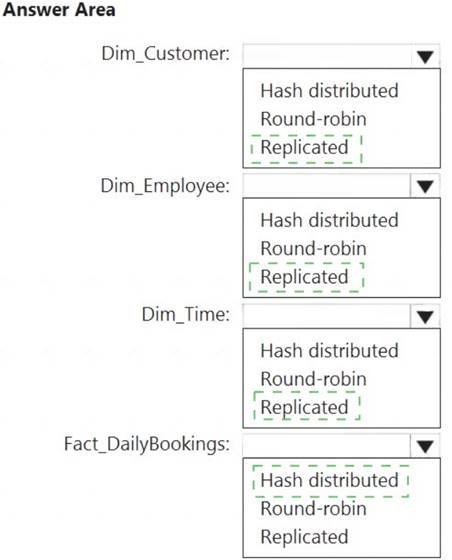

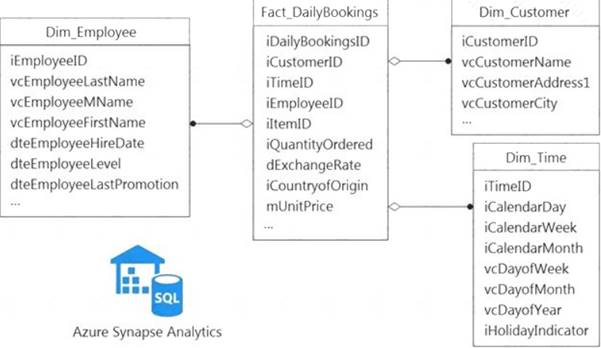

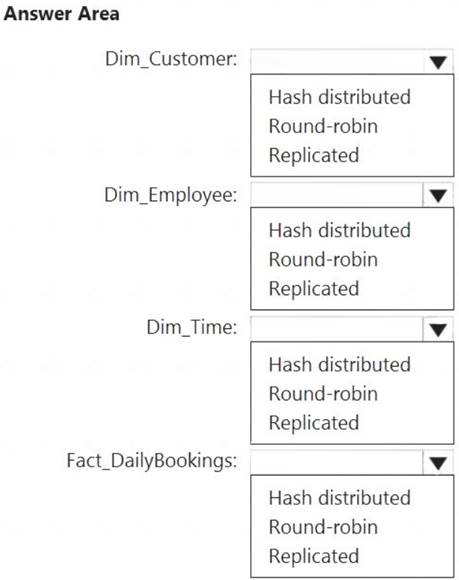

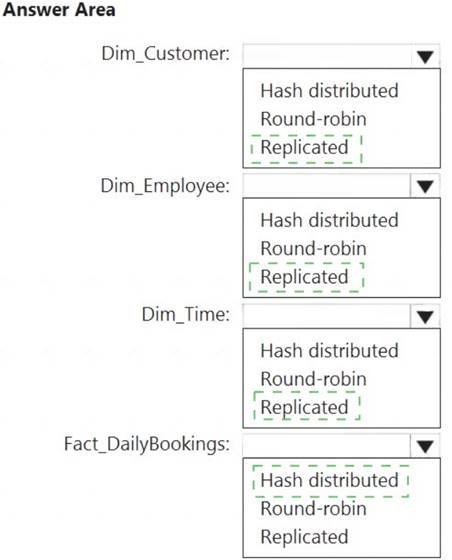

You have a data model that you plan to implement in a data warehouse in Azure Synapse Analytics as shown in the following exhibit.

All the dimension tables will be less than 2 GB after compression, and the fact table will be approximately 6 TB.

Which type of table should you use for each table? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Solution:

Does this meet the goal?


Question 11

- (Exam Topic 3)

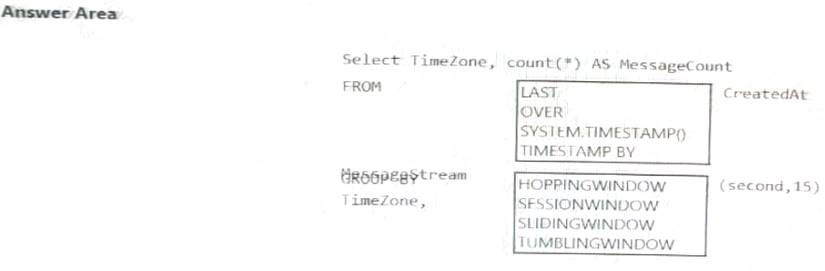

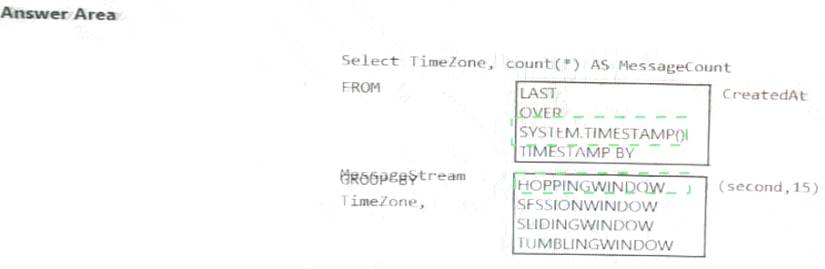

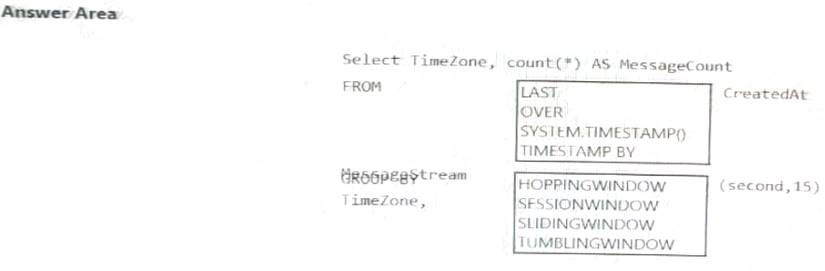

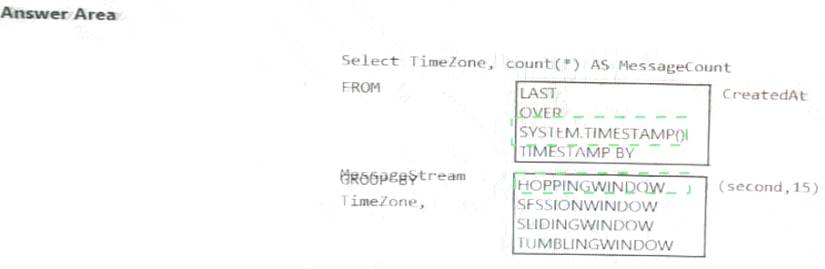

You are designing an Azure Stream Analytics solution that receives instant messaging data from an Azure event hub.

You need to ensure that the output from the Stream Analytics job counts the number of messages per time zone every 15 seconds.

How should you complete the Stream Analytics query? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Solution:

Does this meet the goal?
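The query for this question appears only in the exhibit images, so it is not reproduced here. As a hedged stand-in, the Python sketch below shows the counting behavior the requirement describes: messages grouped by time zone in non-overlapping 15-second windows. The `count_per_time_zone` helper and the sample events are hypothetical, and the code is plain Python rather than the Stream Analytics query language.

```python
from collections import Counter


def count_per_time_zone(events, window_seconds: int = 15) -> dict:
    """Count events per (window_start, time_zone).

    `events` is an iterable of (timestamp_in_seconds, time_zone) pairs.
    Windows are non-overlapping and start at multiples of `window_seconds`.
    """
    counts = Counter()
    for timestamp, time_zone in events:
        window_start = timestamp // window_seconds * window_seconds
        counts[(window_start, time_zone)] += 1
    return dict(counts)


if __name__ == "__main__":
    sample = [(1, "UTC"), (14, "UTC"), (15, "UTC"), (16, "PST")]
    for (window_start, time_zone), count in sorted(count_per_time_zone(sample).items()):
        print(f"window starting at {window_start}s, {time_zone}: {count}")
```

Each message lands in exactly one window, which is the property that distinguishes this grouping from overlapping window types.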


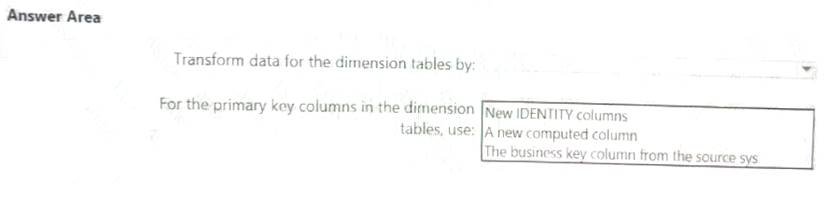

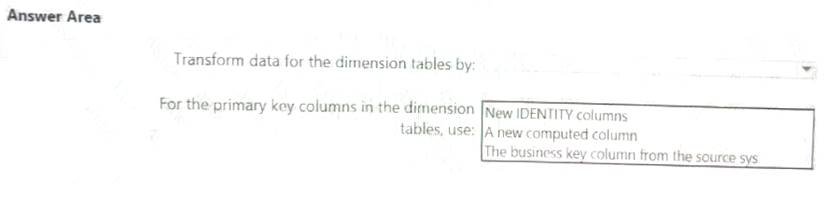

Question 12

- (Exam Topic 3)

You have a Microsoft SQL Server database that uses a third normal form schema.

You plan to migrate the data in the database to a star schema in an Azure Synapse Analytics dedicated SQL pool.

You need to design the dimension tables. The solution must optimize read operations.

What should you include in the solution? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Solution:

Does this meet the goal?


Question 13

- (Exam Topic 3)

You have several Azure Data Factory pipelines that contain a mix of the following types of activities.

* Wrangling data flow

* Notebook

* Copy

* Jar

Which two Azure services should you use to debug the activities? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.


Question 14

- (Exam Topic 3)

You have an Azure Databricks workspace named workspace1 in the Standard pricing tier.

You need to configure workspace1 to support autoscaling all-purpose clusters. The solution must meet the following requirements:

Automatically scale down workers when the cluster is underutilized for three minutes.

Minimize the time it takes to scale to the maximum number of workers.

Minimize costs.

What should you do first?


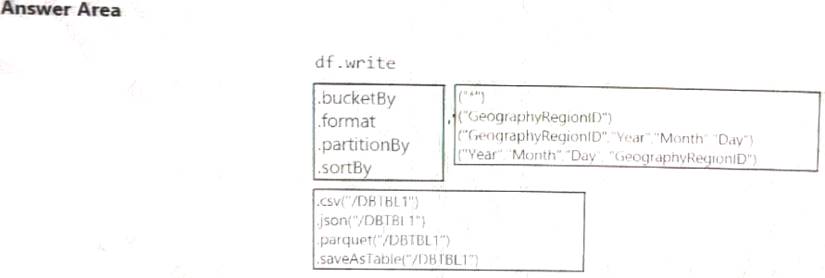

Question 15

- (Exam Topic 3)

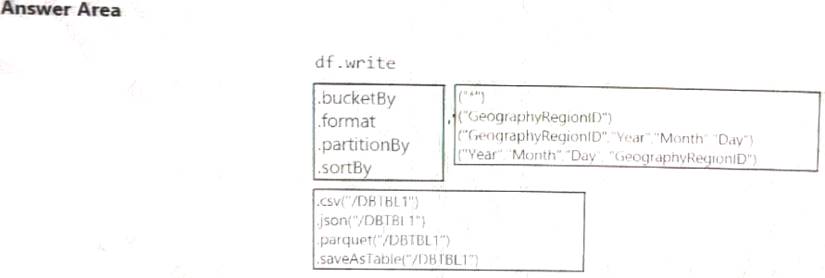

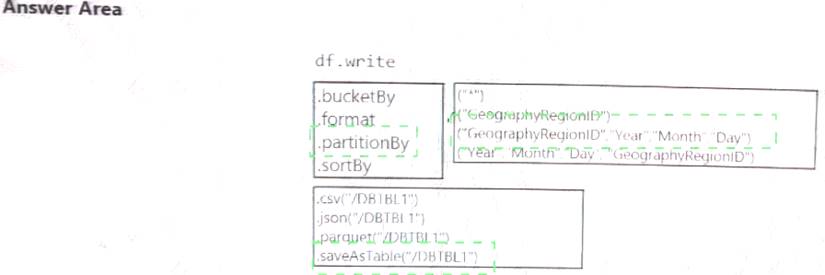

You develop a dataset named DBTBL1 by using Azure Databricks. DBTBL1 contains the following columns:

• SensorTypeID

• GeographyRegionID

• Year

• Month

• Day

• Hour

• Minute

• Temperature

• WindSpeed

• Other

You need to store the data to support daily incremental load pipelines that vary for each GeographyRegionID. The solution must minimize storage costs.

How should you complete the code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Solution:

Does this meet the goal?

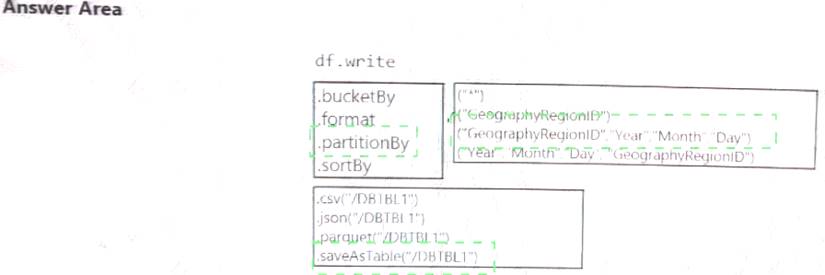


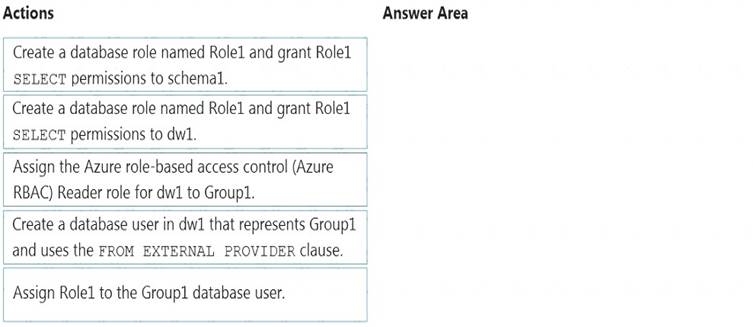

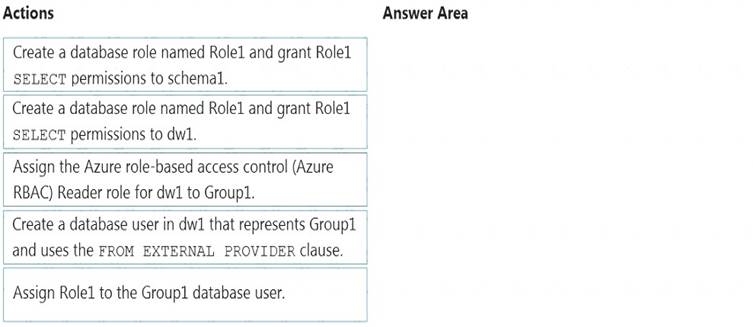

Question 16

- (Exam Topic 3)

You have an Azure Active Directory (Azure AD) tenant that contains a security group named Group1. You have an Azure Synapse Analytics dedicated SQL pool named dw1 that contains a schema named schema1.

You need to grant Group1 read-only permissions to all the tables and views in schema1. The solution must use the principle of least privilege.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

NOTE: More than one order of answer choices is correct. You will receive credit for any of the correct orders you select.

Solution:

Step 1: Create a database role named Role1 and grant Role1 SELECT permissions to schema1.

You need to grant Group1 read-only permissions to all the tables and views in schema1. Place one or more database users into a database role and then assign permissions to the database role.

Step 2: Assign Role1 to the Group1 database user.

Step 3: Assign the Azure role-based access control (Azure RBAC) Reader role for dw1 to Group1.

Reference:

https://docs.microsoft.com/en-us/azure/data-share/how-to-share-from-sql

Does this meet the goal?


Question 17

- (Exam Topic 3)

You have an Azure subscription that contains the following resources:

* An Azure Active Directory (Azure AD) tenant that contains a security group named Group1.

* An Azure Synapse Analytics SQL pool named Pool1.

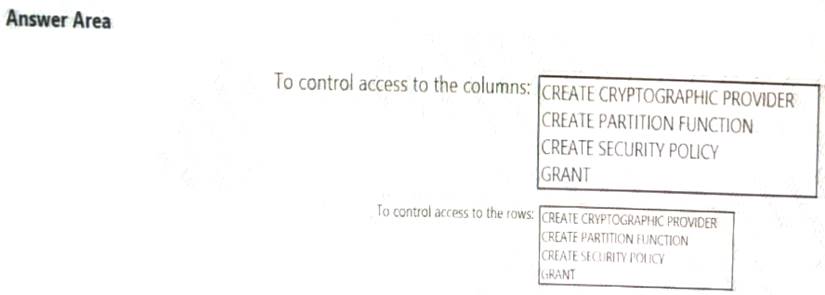

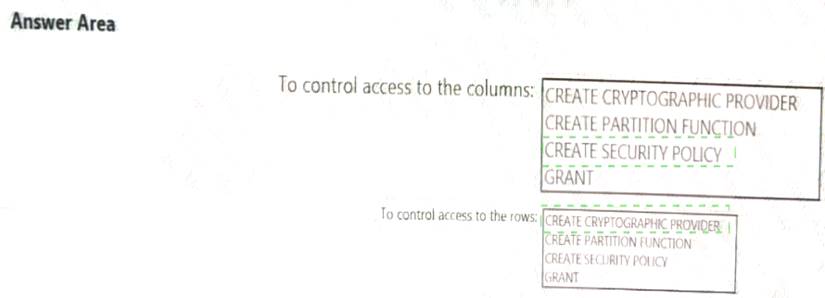

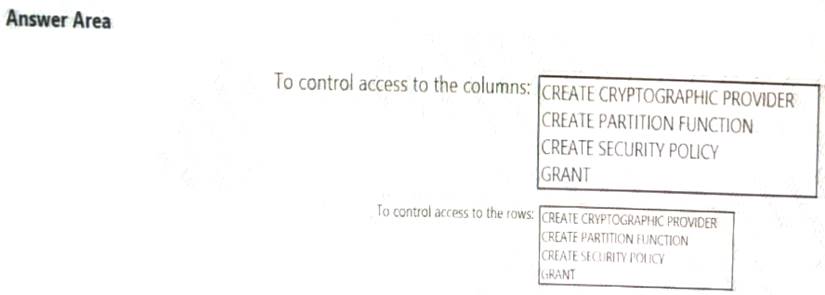

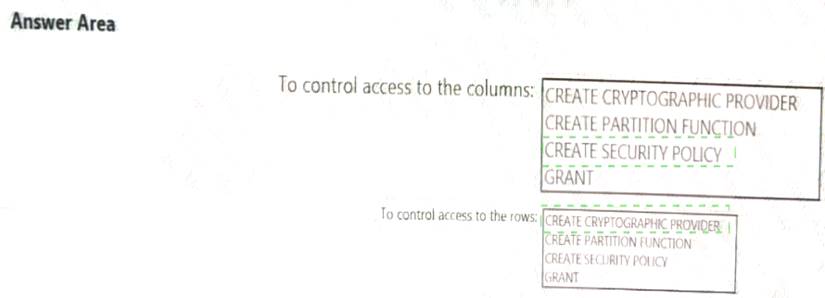

You need to control the access of Group1 to specific columns and rows in a table in Pool1.

Which Transact-SQL commands should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Solution:

Does this meet the goal?


Question 18

- (Exam Topic 3)

You are designing a partition strategy for a fact table in an Azure Synapse Analytics dedicated SQL pool. The table has the following specifications:

• Contain sales data for 20,000 products.

• Use hash distribution on a column named ProductID.

• Contain 2.4 billion records for the years 2019 and 2020.

Which number of partition ranges provides optimal compression and performance of the clustered columnstore index?
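A back-of-the-envelope check can be written in a few lines of Python. It assumes the commonly cited guidance that a dedicated SQL pool spreads every table across 60 distributions and that a clustered columnstore index compresses best with at least 1 million rows per distribution and partition. The `max_partitions` helper is hypothetical, and even distribution of rows is also an assumption.

```python
DISTRIBUTIONS = 60                     # distributions in a dedicated SQL pool
MIN_ROWS_PER_PARTITION_SLICE = 1_000_000  # rows per distribution and partition (guidance)


def max_partitions(
    total_rows: int,
    distributions: int = DISTRIBUTIONS,
    min_rows: int = MIN_ROWS_PER_PARTITION_SLICE,
) -> int:
    """Largest partition count that still leaves `min_rows` rows in each
    distribution of each partition, assuming rows are spread evenly."""
    return total_rows // (distributions * min_rows)


if __name__ == "__main__":
    total_rows = 2_400_000_000  # 2.4 billion records from the question
    print(max_partitions(total_rows))
```

Under these assumptions, 2.4 billion rows divided by 60 distributions and 1 million rows per slice gives an upper bound of 40 partitions.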
