23 July, 2022

Practical Amazon-Web-Services DOP-C01 Free Braindumps Online

We provide real DOP-C01 exam questions and answers braindumps in two formats: a downloadable PDF and practice tests. Pass the Amazon-Web-Services DOP-C01 exam quickly and easily. The DOP-C01 PDF is available for reading and printing, so you can print copies and practice as many times as you like. With the help of our Amazon-Web-Services DOP-C01 PDF and VCE dumps, you can easily pass the DOP-C01 exam.

Online DOP-C01 free questions and answers, new version:

Question 1

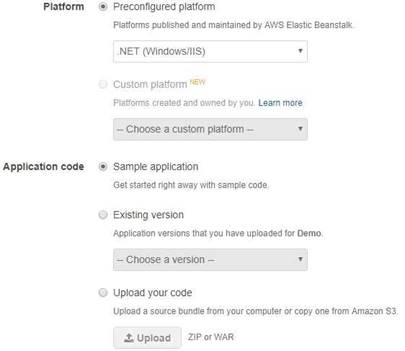

Your development team uses .NET to code their web application. They want to deploy it to AWS for the purpose of continuous integration and deployment. The application code is hosted in a Git repository. Which of the following combinations of steps can be used to fulfil this requirement? Choose 2 answers from the options given below.

Question 2

You have a set of EC2 instances hosted in AWS. You have created a role named DemoRole and assigned that role to a policy, but you are unable to use that role with an instance. Why is this the case?

Question 3

Which of the following CloudFormation helper scripts can help install packages on EC2 resources?

Question 4

You are administering a continuous integration application that polls version control for changes and then launches new Amazon EC2 instances for a full suite of build tests. What should you do to ensure the lowest overall cost while being able to run as many tests in parallel as possible?

Question 5

Which of the following services can be used to implement DevOps in your company?

Question 6

Which of the following commands for the Elastic Beanstalk CLI can be used to create the current application into the specified environment?

Question 7

Your company has developed a web application and is hosting it in an Amazon S3 bucket configured for static website hosting. The application is using the AWS SDK for JavaScript in the browser to access data stored in an Amazon DynamoDB table. How can you ensure that API keys for access to your data in DynamoDB are kept secure?

Question 8

Your company is using an Auto Scaling group to scale out and scale in instances. There is an expectation of a peak in traffic every Monday at 8am. The traffic is then expected to come down before the weekend, on Friday at 5pm. How should you configure Auto Scaling in this case?
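A recurring, predictable pattern like this one is commonly expressed as two scheduled actions. The sketch below only builds the two definitions as plain dictionaries. It is a minimal illustration, assuming the key names match the parameters of boto3's `put_scheduled_update_group_action`; the group name, action names, and capacities are hypothetical and not taken from the question.

```python
# Hypothetical values: "web-asg", the action names, and the capacities
# are illustrative assumptions, not part of the question.
def weekly_schedule(group_name, peak_capacity, base_capacity):
    """Build two recurring scheduled-action definitions.

    Recurrence uses cron fields: minute hour day-of-month month day-of-week.
    Times are interpreted as UTC unless a time zone is set separately.
    """
    return [
        {
            "AutoScalingGroupName": group_name,
            "ScheduledActionName": "scale-out-monday-0800",
            "Recurrence": "0 8 * * 1",   # every Monday at 08:00
            "DesiredCapacity": peak_capacity,
        },
        {
            "AutoScalingGroupName": group_name,
            "ScheduledActionName": "scale-in-friday-1700",
            "Recurrence": "0 17 * * 5",  # every Friday at 17:00
            "DesiredCapacity": base_capacity,
        },
    ]


actions = weekly_schedule("web-asg", peak_capacity=10, base_capacity=2)
for action in actions:
    print(action["ScheduledActionName"], action["Recurrence"])
```

Each dictionary could then be passed as keyword arguments to an Auto Scaling client call; that step is left out here so the sketch runs without AWS credentials.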

Question 9

You are managing the development of an application that uses DynamoDB to store JSON data. You have already set the Read and Write capacity of the DynamoDB table. You are unsure of the amount of traffic that will be received by the application during the deployment time. How can you ensure that the DynamoDB table is not heavily throttled and does not become a bottleneck for the application? Choose 2 answers from the options below.

Question 10

There is a company website that is going to be launched in the coming weeks. There is a probability that the traffic will be quite high in the first couple of weeks. In the event of a load failure, how can you set up DNS failover to a static website? Choose the correct answer from the options given below.

Question 11

Your IT company is currently hosting a production environment in Elastic Beanstalk. You understand that the Elastic Beanstalk service provides a facility known as managed updates, which are minor and patch version updates that are periodically required for your system. Your IT supervisor is worried about the impact that these updates would have on the system. What can you tell them about the Elastic Beanstalk service with regard to managed updates?

Question 12

Your company has recently extended its datacenter into a VPC on AWS. There is a requirement for on-premises users to manage AWS resources from the AWS console. You don't want to create IAM users for them again. Which of the below options will fit your needs for authentication?

Question 13

You are hired as the new head of operations for a SaaS company. Your CTO has asked you to make debugging any part of your entire operation simpler and as fast as possible. She complains that she has no idea what is going on in the complex, service-oriented architecture, because the developers just log to disk, and it's very hard to find errors in logs on so many services. How can you best meet this requirement and satisfy your CTO?

Question 14

You have instances running in your VPC, both production and development. You want to ensure that the people responsible for the development instances don't have access to the production instances, for better security. Using policies, which of the following would be the best way to accomplish this? Choose the correct answer from the options given below.
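One common way to separate the two sets of instances with policies is to tag them and scope an IAM policy by tag. The sketch below is a minimal illustration under stated assumptions: the tag key `Environment`, the tag value `Development`, and the chosen actions are hypothetical, and the policy is only built and printed, not attached to anything.

```python
import json

# Hypothetical tag key/value and action list, chosen for illustration only.
def build_dev_only_policy(tag_key="Environment", tag_value="Development"):
    """Build an IAM policy document that allows instance operations
    only on EC2 instances carrying the given tag."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "ec2:StartInstances",
                    "ec2:StopInstances",
                    "ec2:RebootInstances",
                ],
                "Resource": "arn:aws:ec2:*:*:instance/*",
                # ec2:ResourceTag/<key> matches the tag on the target instance.
                "Condition": {
                    "StringEquals": {f"ec2:ResourceTag/{tag_key}": tag_value}
                },
            }
        ],
    }


dev_policy = build_dev_only_policy()
print(json.dumps(dev_policy, indent=2))
```

With a policy of this shape attached to the development group, requests against instances tagged for production would not match the condition and so would not be allowed by this statement.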

Question 15

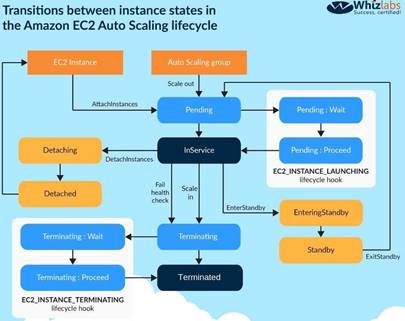

You have an Auto Scaling group of instances that processes messages from an Amazon Simple Queue Service (SQS) queue. The group scales on the size of the queue. Processing involves calling a third-party web service. The web service is complaining about the number of failed and repeated calls it is receiving from you. You have noticed that when the group scales in, instances are being terminated while they are processing. What cost-effective solution can you use to reduce the number of incomplete process attempts?

Question 16

You are doing a load testing exercise on your application hosted on AWS. While testing your Amazon RDS MySQL DB instance, you notice that when you hit 100% CPU utilization on it, your application becomes non-responsive. Your application is read-heavy. What are methods to scale your data tier to meet the application's needs? Choose three answers from the options given below.

Question 17

You use Amazon CloudWatch as your primary monitoring system for your web application. After a recent software deployment, your users are getting intermittent 500 Internal Server Errors when using the web application. You want to create a CloudWatch alarm and notify an on-call engineer when these occur. How can you accomplish this using AWS services? Choose three answers from the options given below.


Question 18

An enterprise wants to use a third-party SaaS application running on AWS. The SaaS application needs to have access to issue several API commands to discover Amazon EC2 resources running within the enterprise's account. The enterprise has internal security policies that require any outside access to their environment to conform to the principles of least privilege, and there must be controls in place to ensure that the credentials used by the SaaS vendor cannot be used by any other third party. Which of the following would meet all of these conditions?
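The two constraints in this scenario (least privilege, and credentials that no other third party can reuse) are often illustrated with a cross-account IAM role whose trust policy requires an external ID. The sketch below is a minimal illustration, not a definitive setup: the account ID `111122223333` and the external ID string are hypothetical placeholders, and the documents are only built and printed.

```python
import json

# Hypothetical placeholders: the vendor account ID and external ID below
# are invented for illustration and do not come from the question.
def build_trust_policy(vendor_account_id, external_id):
    """Trust policy: only the vendor account may assume the role,
    and only when it supplies the agreed external ID."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{vendor_account_id}:root"},
                "Action": "sts:AssumeRole",
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }


def build_discovery_permissions():
    """Permissions policy: read-only EC2 discovery, nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": ["ec2:DescribeInstances"], "Resource": "*"}
        ],
    }


trust_policy = build_trust_policy("111122223333", "example-external-id")
permissions = build_discovery_permissions()
print(json.dumps(trust_policy, indent=2))
print(json.dumps(permissions, indent=2))
```

The trust policy controls who may assume the role, while the permissions policy limits what the role can do once assumed; the exact list of `Describe` actions would depend on what the vendor actually needs.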

Question 19

You need to deploy an AWS stack in a repeatable manner across multiple environments. You have selected CloudFormation as the right tool to accomplish this, but have found that there is a resource type you need to create and model, but is unsupported by CloudFormation. How should you overcome this challenge?

Question 20

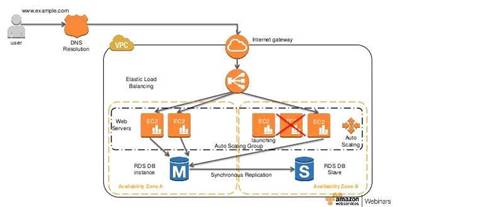

Your company currently has a set of EC2 instances running a web application which sits behind an Elastic Load Balancer. You also have an Amazon RDS instance which is used by the web application. You have been asked to ensure that this architecture is self-healing in nature and cost-effective. Which of the following would fulfil this requirement? Choose 2 answers from the options given below.

Question 21

You have been asked to de-risk deployments at your company. Specifically, the CEO is concerned about outages that occur because of accidental inconsistencies between Staging and Production, which sometimes cause unexpected behaviors in Production even when Staging tests pass. You already use Docker to get high consistency between Staging and Production for the application environment on your EC2 instances. How do you further de-risk the rest of the execution environment, since in AWS, there are many service components you may use beyond EC2 virtual machines?

Question 22

You have launched a CloudFormation template, but are receiving a failure notification after the template was launched. What is the default behavior of CloudFormation in such a case?

Question 23

Your company is hosting an application in AWS. The application consists of a set of web servers and AWS RDS. The application is read-intensive. It has been noticed that the response time of the application degrades due to the load on the AWS RDS instance. Which of the following measures can be taken to scale the data tier? Choose 2 answers from the options given below.

Question 24

Your CTO has asked you to make sure that you know what all users of your AWS account are doing to change resources at all times. She wants a report of who is doing what over time, reported to her once per week, for as broad a resource type group as possible. How should you do this?

Question 25

Which of the following CLI commands is used to spin up new EC2 Instances?