22 June, 2021

The Refresh Guide To AWS-Certified-Security-Specialty Pdf

We provide real AWS-Certified-Security-Specialty exam questions and answers in two formats: PDF and practice tests. Pass the Amazon AWS-Certified-Security-Specialty exam quickly and easily. The PDF version is available for reading and printing, so you can print copies and practice as many times as you like. With the help of our Amazon AWS-Certified-Security-Specialty PDF and VCE dumps, you can easily pass the AWS-Certified-Security-Specialty exam.

Amazon AWS-Certified-Security-Specialty Free Dumps Questions Online, Read and Test Now.

Question 1

You are planning on hosting a web application on AWS. You create an EC2 Instance in a public subnet. This instance needs to connect to an EC2 Instance that will host an Oracle database. Which of the following steps should be followed to ensure a secure setup is in place? Select 2 answers.

Please select:

Question 2

Your company has the following setup in AWS

Question 3

When you enable automatic key rotation for an existing CMK whose backing key is managed by AWS, after how long is the key rotated?

Please select:

Question 4

Your team is experimenting with the API Gateway service for an application. There is a need to implement a custom module which can be used for authentication/authorization for calls made to the API Gateway. How can this be achieved?

Please select:

Question 5

In your LAMP application, you have some developers that say they would like access to your logs. However, since you are using an AWS Auto Scaling group, your instances are constantly being recreated.

What would you do to make sure that these developers can access these log files? Choose the correct answer from the options below.

Please select:

Question 6

Your company has a hybrid environment, with on-premises servers and servers hosted in the AWS cloud. They are planning to use Systems Manager for patching servers. Which of the following is a prerequisite for this to work?

Please select:

Question 7

You need to create a policy and apply it for just an individual user. How could you accomplish this in the right way?

Please select:

Question 8

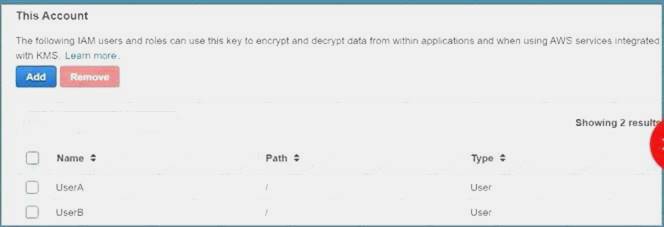

Your developer is using the KMS service and an assigned key in their Java program. They get the below error when running the code:

arn:aws:iam::113745388712:user/UserB is not authorized to perform: kms:DescribeKey

Which of the following could help resolve the issue?

Please select:
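
As background for the error in this question, the sketch below builds the kind of policy statement that grants `kms:DescribeKey` to a principal. It is only an illustration, not the exam's answer key: the `Sid` value and the helper name `describe_key_statement` are hypothetical, and the statement is written in the form a KMS key policy would use (where `"Resource": "*"` refers to the key the policy is attached to).

```python
import json


def describe_key_statement(principal_arn):
    """Build a key-policy statement allowing kms:DescribeKey (illustrative only)."""
    return {
        "Sid": "AllowDescribeKey",  # hypothetical statement ID
        "Effect": "Allow",
        "Principal": {"AWS": principal_arn},
        "Action": "kms:DescribeKey",
        "Resource": "*",  # in a key policy, "*" means the key itself
    }


# Principal ARN taken from the error message in the question.
statement = describe_key_statement("arn:aws:iam::113745388712:user/UserB")
print(json.dumps(statement, indent=2))
```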

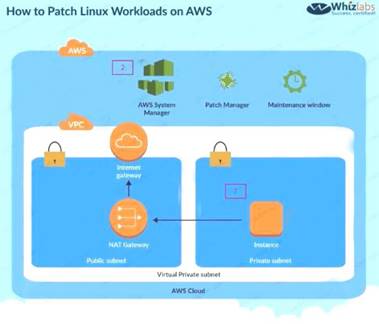

Question 9

You have a set of 100 EC2 Instances in an AWS account. You need to ensure that all of these instances are patched and kept up to date. All of the instances are in a private subnet. How can you achieve this? Choose 2 answers from the options given below.

Please select:

Question 10

You have an instance set up in a test environment in AWS. You installed the required application and then promoted the server to a production environment. Your IT Security team has advised that there may be traffic flowing in from an unknown IP address to port 22. How can this be mitigated immediately?

Please select:

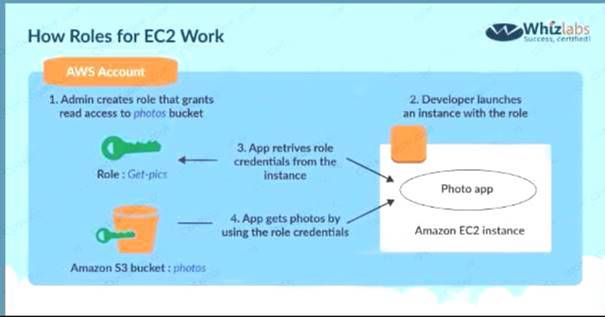

Question 11

An application is designed to run on an EC2 Instance. The application needs to work with an S3 bucket. From a security perspective, what is the ideal way for the EC2 instance/application to be configured?

Please select:

Question 12

Your IT Security team has identified a number of vulnerabilities across critical EC2 Instances in the company's AWS Account. Which would be the easiest way to ensure these vulnerabilities are remediated?

Please select:

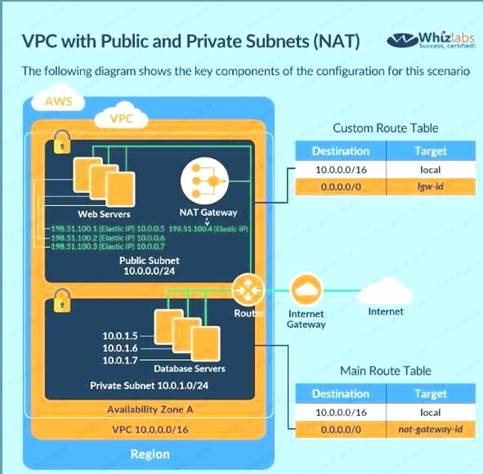

Question 13

Your current setup in AWS consists of the following architecture: 2 public subnets, one which has the web servers accessed by users across the internet and the other for the database server. Which of the following changes to the architecture would add a better security boundary to the resources hosted in your setup?

Please select:

Question 14

You have a requirement to serve up private content using the keys available with CloudFront. How can this be achieved?

Please select:

Question 15

A company has a set of resources defined in AWS. It is mandated that all API calls to the resources be monitored. All API calls must also be stored for lookup purposes. Any log data older than 6 months must be archived. Which of the following meets these requirements? Choose 2 answers from the options given below. Each answer forms part of the solution.

Please select:

Question 16

In order to encrypt data in transit for a connection to an AWS RDS instance, which of the following would you implement?

Please select:

Question 17

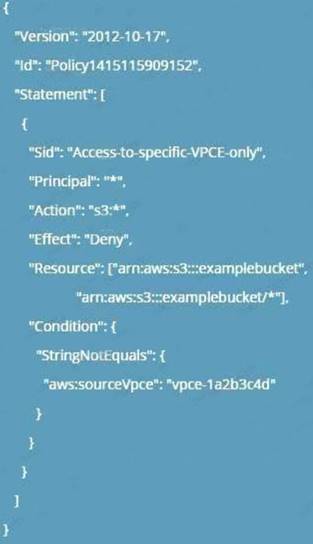

You have a bucket and a VPC defined in AWS. You need to ensure that the bucket can only be accessed by the VPC endpoint. How can you accomplish this?

Please select:
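
To make the scenario concrete, here is a minimal sketch of a bucket policy that denies all S3 actions unless the request arrives through a given VPC endpoint, using the `aws:SourceVpce` condition key. The bucket name `example-bucket`, the endpoint ID `vpce-1a2b3c4d`, the `Sid`, and the helper name `vpce_only_policy` are all placeholders assumed for illustration; none of them come from the question.

```python
import json


def vpce_only_policy(bucket_name, vpce_id):
    """Build a bucket policy that denies access from anywhere but one VPC endpoint."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUnlessFromVpce",  # hypothetical statement ID
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
                # Deny applies whenever the request's endpoint ID does not match.
                "Condition": {"StringNotEquals": {"aws:SourceVpce": vpce_id}},
            }
        ],
    }


# Placeholder bucket name and endpoint ID.
policy = vpce_only_policy("example-bucket", "vpce-1a2b3c4d")
print(json.dumps(policy, indent=2))
```

Because the statement is an explicit deny, it takes effect regardless of any allow granted elsewhere, which is why this pattern is usually written as a deny with a negated condition rather than as an allow.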

Question 18

You are hosting a website via website hosting on an S3 bucket - http://demo.s3-website-us-east-1.amazonaws.com. You have some web pages that use JavaScript to access resources in another bucket which also has website hosting enabled. But when users access the web pages, they get a blocked JavaScript error. How can you rectify this?

Please select:
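
Blocked cross-bucket JavaScript requests of this kind are typically a cross-origin resource sharing (CORS) matter. As a hedged illustration, the sketch below builds a CORS configuration for the second bucket that allows `GET` requests from the first site's origin. The origin string assumes the website endpoint in the question is in `us-east-1`, and the helper name `cors_for_origin` and the `MaxAgeSeconds` value are arbitrary choices. The dictionary follows the `CORSRules` shape that S3 CORS configurations use.

```python
import json


def cors_for_origin(origin):
    """Build an S3 CORS configuration allowing read-only requests from one origin."""
    return {
        "CORSRules": [
            {
                "AllowedOrigins": [origin],
                "AllowedMethods": ["GET"],
                "AllowedHeaders": ["*"],
                "MaxAgeSeconds": 3000,  # arbitrary preflight cache lifetime
            }
        ]
    }


# Origin assumed from the website endpoint named in the question.
cors = cors_for_origin("http://demo.s3-website-us-east-1.amazonaws.com")
print(json.dumps(cors, indent=2))
```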

Question 19

Your company has an EC2 Instance hosted in AWS. This EC2 Instance hosts an application. Currently this application is experiencing a number of issues. You need to inspect the network packets to see what type of error is occurring. Which one of the below steps can help address this issue?

Please select: