15 November, 2023

Verified Microsoft AI-100 Exam Online

All that matters here is passing the Microsoft AI-100 exam, and all you need is a high score on AI-100: Designing and Implementing an Azure AI Solution. The only thing you need to do is download the Exambible AI-100 exam study guides now. With our money-back guarantee, we will not let you down.

Microsoft AI-100 Free Dump Questions Online: Read and Test Now.

Question 1

- (Exam Topic 2)

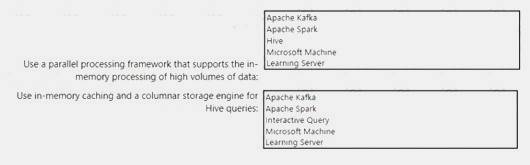

You are designing an AI solution that must meet the following processing requirements:

• Use a parallel processing framework that supports the in-memory processing of high volumes of data.

• Use in-memory caching and a columnar storage engine for Apache Hive queries.

What should you use to meet each requirement? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Solution:

Box 1: Apache Spark

Apache Spark is a parallel processing framework that supports in-memory processing to boost the performance of big-data analytic applications. Apache Spark in Azure HDInsight is the Microsoft implementation of Apache Spark in the cloud.

Box 2: Interactive Query

Interactive Query provides in-memory caching and an improved columnar storage engine for Hive queries.

References:

https://docs.microsoft.com/en-us/azure/hdinsight/spark/apache-spark-overview

https://docs.microsoft.com/bs-latn-ba/azure/hdinsight/interactive-query/apache-interactive-query-get-started
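
The two requirements above hinge on the terms "in-memory" and "columnar". The Python sketch below is a conceptual toy, not how Spark or Interactive Query are actually implemented: it assumes a small hypothetical sensor table and shows why a column-oriented layout lets an aggregate read only the column it needs, and how an in-memory cache lets a repeated query skip the scan.

```python
from functools import lru_cache

# Hypothetical sensor table used only for illustration.
ROWS = [
    {"device": "a", "temp": 20.5, "humidity": 40},
    {"device": "b", "temp": 22.0, "humidity": 35},
    {"device": "a", "temp": 21.5, "humidity": 42},
]

# Column-oriented copy of the same data: each field's values stored together.
COLUMNS = {name: [row[name] for row in ROWS] for name in ROWS[0]}


def avg_temp_row_store(rows):
    # A row layout touches every full record just to read one field.
    return sum(row["temp"] for row in rows) / len(rows)


def avg_temp_column_store(columns):
    # A columnar layout scans only the single column the query needs.
    temps = columns["temp"]
    return sum(temps) / len(temps)


@lru_cache(maxsize=32)
def cached_avg(column_name):
    # In-memory cache: a repeated identical query returns without rescanning.
    values = COLUMNS[column_name]
    return sum(values) / len(values)


if __name__ == "__main__":
    print(avg_temp_row_store(ROWS))
    print(avg_temp_column_store(COLUMNS))
    cached_avg("temp")
    cached_avg("temp")
    print(cached_avg.cache_info())
```

Both averages agree; the difference is only how much data each function touches, and the second `cached_avg("temp")` call is served from the cache.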

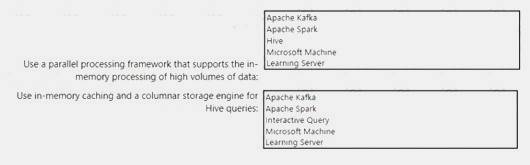

Question 2

- (Exam Topic 2)

You are developing an application that will perform optical character recognition of photos of medical logbooks. You need to recommend a solution to validate the data against a validated set of records. Which service should you include in the recommendation?

Question 3

- (Exam Topic 2)

You plan to implement a new data warehouse for a planned AI solution. You have the following information regarding the data warehouse:

• The data files will be available in one week.

• Most queries that will be executed against the data warehouse will be ad-hoc queries.

• The schemas of data files that will be loaded to the data warehouse will change often.

• One month after the planned implementation, the data warehouse will contain 15 TB of data.

You need to recommend a database solution to support the planned implementation.

What two solutions should you include in the recommendation? Each correct answer is a complete solution. NOTE: Each correct selection is worth one point.

Question 4

- (Exam Topic 2)

You have Azure IoT Edge devices that collect measurements every 30 seconds. You plan to send the measurements to an Azure IoT hub. You need to ensure that every event is processed as quickly as possible. What should you use?

Question 5

- (Exam Topic 2)

You plan to design a solution for an AI implementation that uses data from IoT devices.

You need to recommend a data storage solution for the IoT devices that meets the following requirements:

• Allow data to be queried in real time as it streams into the solution.

• Provide the lowest amount of latency for loading data into the solution.

What should you include in the recommendation?

Question 6

- (Exam Topic 2)

Your company recently deployed several hardware devices that contain sensors.

The sensors generate new data on an hourly basis. The data generated is stored on-premises and retained for several years.

During the past two months, the sensors generated 300 GB of data.

You plan to move the data to Azure and then perform advanced analytics on the data. You need to recommend an Azure storage solution for the data.

Which storage solution should you recommend?

Question 7

- (Exam Topic 1)

You need to recommend a data storage solution that meets the technical requirements.

What is the best data storage solution to recommend? More than one answer choice may achieve the goal. Select the BEST answer.

Question 8

- (Exam Topic 1)

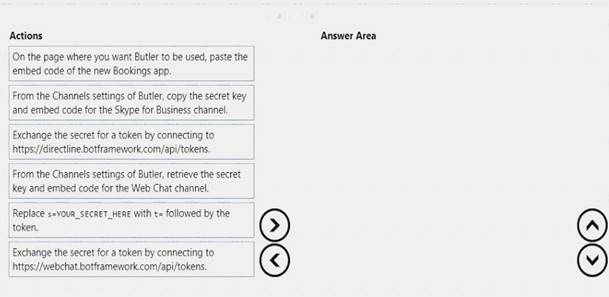

You need to integrate the new Bookings app and the Butler chatbot.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Solution:

References:

https://docs.microsoft.com/en-us/azure/bot-service/bot-service-channel-connect-webchat?view=azure-bot-servic

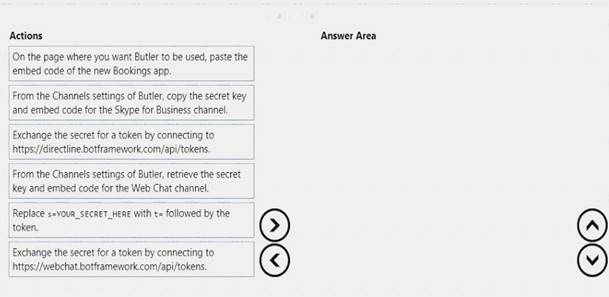

Question 9

- (Exam Topic 2)

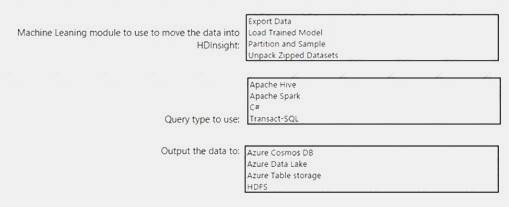

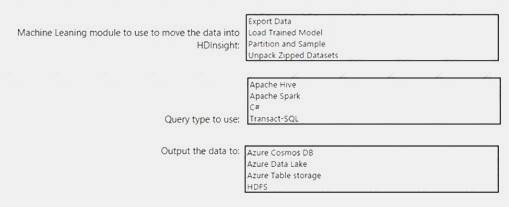

You are designing a solution that will ingest data from an Azure IoT Edge device, preprocess the data in Azure Machine Learning, and then move the data to Azure HDInsight for further processing.

What should you include in the solution? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Solution:

Box 1: Export Data

Use the Export data to Hive option in the Export Data module in Azure Machine Learning Studio. This option is useful when you are working with very large datasets and want to save your machine learning experiment data to a Hadoop cluster or HDInsight distributed storage.

Box 2: Apache Hive

Apache Hive is a data warehouse system for Apache Hadoop. Hive enables data summarization, querying, and analysis of data. Hive queries are written in HiveQL, which is a query language similar to SQL.

Box 3: Azure Data Lake

The default storage for the HDFS file system of an HDInsight cluster can be either an Azure Storage account or an Azure Data Lake Storage account.

References:

https://docs.microsoft.com/en-us/azure/machine-learning/studio-module-reference/export-to-hive-query

https://docs.microsoft.com/en-us/azure/hdinsight/hadoop/hdinsight-use-hive
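
Because HiveQL is similar to SQL, a summarization query over exported data reads like ordinary SQL. The sketch below uses Python's built-in `sqlite3` module as a local stand-in for Hive, with a hypothetical `readings` table. Only the SELECT statement is meant to carry over to HiveQL; table-creation syntax and data types differ in Hive.

```python
import sqlite3

# Hypothetical preprocessed readings, as they might look after export.
readings = [
    ("edge-01", 20.5),
    ("edge-01", 21.5),
    ("edge-02", 22.0),
]

# sqlite3 is only a local stand-in for Hive in this sketch.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (device_id TEXT, value REAL)")
conn.executemany("INSERT INTO readings VALUES (?, ?)", readings)

# Summarization query: the same SELECT ... GROUP BY is also valid HiveQL.
SUMMARY_QUERY = """
SELECT device_id, COUNT(*) AS n, AVG(value) AS avg_value
FROM readings
GROUP BY device_id
ORDER BY device_id
"""

summary = conn.execute(SUMMARY_QUERY).fetchall()
for device_id, n, avg_value in summary:
    print(device_id, n, avg_value)
conn.close()
```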

Question 10

- (Exam Topic 2)

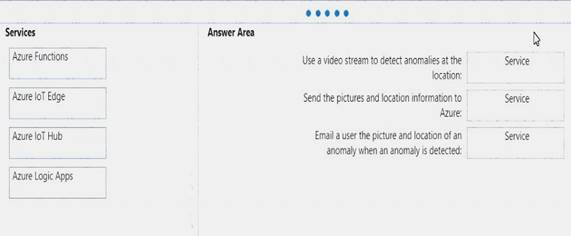

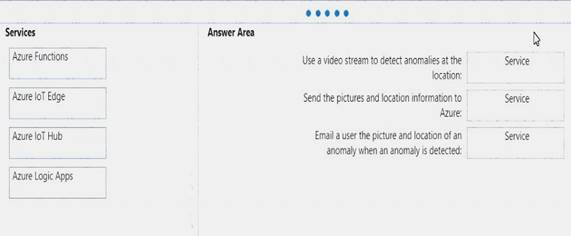

You are designing a solution that uses drones to monitor remote locations for anomalies. The drones have Azure IoT Edge devices. The solution must meet the following requirements:

• Email a user the picture and location of an anomaly when an anomaly is detected.

• Use a video stream to detect anomalies at the location.

• Send the pictures and location information to Azure.

• Use the least amount of code possible.

You develop a custom vision Azure Machine Learning module to detect the anomalies.

Which service should you use for each requirement? To answer, drag the appropriate services to the correct requirements. Each service may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Solution:

Box 1: Azure IoT Edge

Example: You configure the Remote Monitoring solution to respond to anomalies detected by an IoT Edge device. IoT Edge devices let you process telemetry at the edge to reduce the volume of telemetry sent to the solution and to enable faster responses to events on devices.

Box 2: Azure Functions

Box 3: Azure Logic Apps

References:

https://docs.microsoft.com/en-us/azure/iot-accelerators/iot-accelerators-remote-monitoring-edge
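
Processing telemetry at the edge to cut upstream volume can be sketched as a filter that runs on the device and forwards only anomalous readings. This is a minimal Python sketch under stated assumptions: it swaps in a simple z-score threshold (not the custom vision module from the scenario), `filter_anomalies` is a hypothetical helper, and actually sending messages to IoT Hub would go through the Azure IoT SDK, which is not shown.

```python
from statistics import mean, pstdev


def filter_anomalies(readings, z_threshold=2.0):
    """Hypothetical edge-side filter: keep readings whose |z-score| exceeds z_threshold.

    Z-score thresholding is an illustrative stand-in for a real detection model.
    Readings that are filtered out stay on the device, which is what reduces
    the volume of telemetry sent upstream.
    """
    if len(readings) < 2:
        return []
    mu = mean(readings)
    sigma = pstdev(readings)
    if sigma == 0:
        # All readings identical: nothing stands out.
        return []
    return [
        {"index": i, "value": value, "z": (value - mu) / sigma}
        for i, value in enumerate(readings)
        if abs(value - mu) / sigma > z_threshold
    ]


if __name__ == "__main__":
    window = [10, 10, 10, 10, 10, 10, 10, 10, 10, 50]
    to_send = filter_anomalies(window)
    print(f"forwarding {len(to_send)} of {len(window)} readings: {to_send}")
```

For this window, only the final reading (z-score 3.0) is forwarded; the other nine stay on the device.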

Question 11

- (Exam Topic 2)

You have thousands of images that contain text.

You need to process the text from the images into a machine-readable character stream. Which Azure Cognitive Services service should you use?

Question 12

- (Exam Topic 2)

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have Azure IoT Edge devices that generate streaming data.

On the devices, you need to detect anomalies in the data by using Azure Machine Learning models. Once an anomaly is detected, the devices must add information about the anomaly to the Azure IoT Hub stream.

Solution: You deploy Azure Functions as an IoT Edge module.

Does this meet the goal?

Question 13

- (Exam Topic 2)

You need to build an API pipeline that analyzes streaming data. The pipeline will perform the following:

• Visual text recognition

• Audio transcription

• Sentiment analysis

• Face detection

Which Azure Cognitive Services should you use in the pipeline?

Question 14

- (Exam Topic 2)

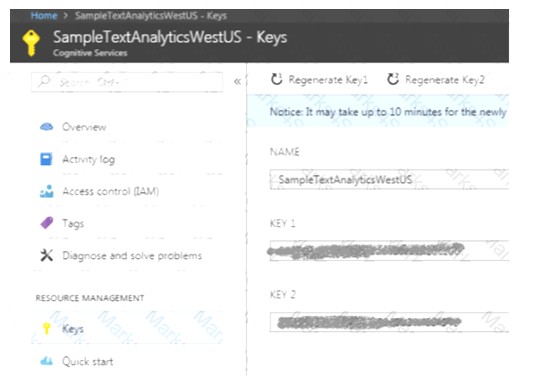

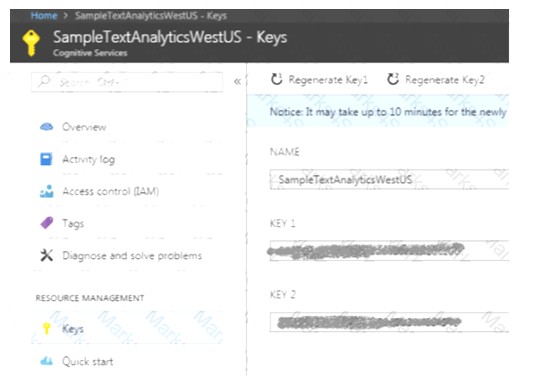

You develop a custom application that uses a token to connect to Azure Cognitive Services resources. A new security policy requires that all access keys are changed every 30 days.

You need to recommend a solution to implement the security policy.

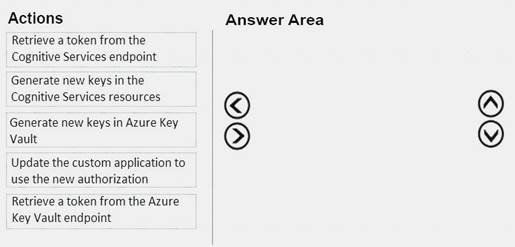

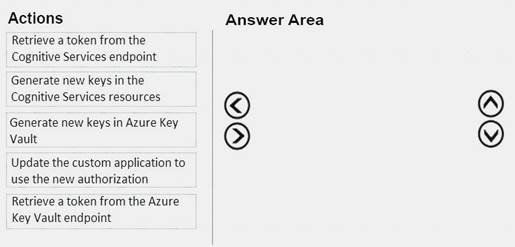

Which three actions should you recommend be performed every 30 days? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Solution:

Step 1: Generate new keys in the Cognitive Services resources

Step 2: Retrieve a token from the Cognitive Services endpoint

Step 3: Update the custom application to use the new authorization

Each request to an Azure Cognitive Service must include an authentication header. This header passes along a subscription key or access token, which is used to validate your subscription for a service or group of services.

References:

https://docs.microsoft.com/en-us/azure/cognitive-services/authentication
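
The authentication header and the three rotation steps can be sketched in Python with only the standard library. This sketch assumes a resource that uses the regional token endpoint (`https://<region>.api.cognitive.microsoft.com/sts/v1.0/issueToken`) and treats the region, keys, and the helper names `build_token_request` and `headers_after_rotation` as hypothetical. It builds the token request without sending it, so the fetch step is passed in as a function.

```python
import urllib.request


def subscription_key_headers(key):
    # Authenticate a request directly with a resource (subscription) key.
    return {"Ocp-Apim-Subscription-Key": key}


def bearer_headers(token):
    # Authenticate a request with an access token instead of the key.
    return {"Authorization": f"Bearer {token}"}


def build_token_request(region, key):
    """Build (but do not send) the POST that exchanges a key for an access token.

    Assumes the regional issueToken endpoint; a resource on a custom
    subdomain would use a different host.
    """
    url = f"https://{region}.api.cognitive.microsoft.com/sts/v1.0/issueToken"
    return urllib.request.Request(
        url, data=b"", headers=subscription_key_headers(key), method="POST"
    )


def fetch_token_over_http(request):
    # Sends the request; the response body is the token as plain text.
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8")


def headers_after_rotation(region, new_key, fetch_token=fetch_token_over_http):
    # Step 1 (generating new keys) happens in Azure; new_key is its result.
    # Step 2: retrieve a token by using the new key.
    token = fetch_token(build_token_request(region, new_key))
    # Step 3: the application sends the new authorization on later calls.
    return bearer_headers(token)
```

Calling `headers_after_rotation("westus", new_key)` with a hypothetical region would make a real network call; passing a stub such as `lambda request: "example-token"` as `fetch_token` exercises the same flow offline.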

Question 15

- (Exam Topic 2)

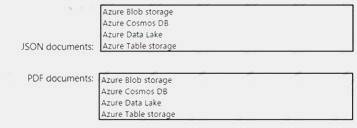

You need to build a sentiment analysis solution that will use input data from JSON documents and PDF documents. The JSON documents must be processed in batches and aggregated.

Which storage type should you use for each file type? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Solution:

References:

https://docs.microsoft.com/en-us/azure/architecture/data-guide/big-data/batch-processing

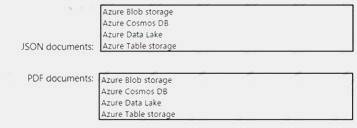

Question 16

- (Exam Topic 2)

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You create several AI models in Azure Machine Learning Studio. You deploy the models to a production environment.

You need to monitor the compute performance of the models.

Solution: You write a custom scoring script.

Does this meet the goal?

Question 17

- (Exam Topic 1)

Which RBAC role should you assign to the KeyManagers group?

Question 18

- (Exam Topic 2)

Your company plans to implement an AI solution that will analyze data from IoT devices.

Data from the devices will be analyzed in real time. The results of the analysis will be stored in a SQL database.

You need to recommend a data processing solution that uses the Transact-SQL language. Which data processing solution should you recommend?

Question 19

- (Exam Topic 2)

You are designing a solution that will use the Azure Content Moderator service to moderate user-generated content.

You need to moderate custom predefined content without repeatedly scanning the collected content. Which API should you use?

Question 20

- (Exam Topic 2)

You are designing an AI solution that will analyze millions of pictures.

You need to recommend a solution for storing the pictures. The solution must minimize costs. Which storage solution should you recommend?
